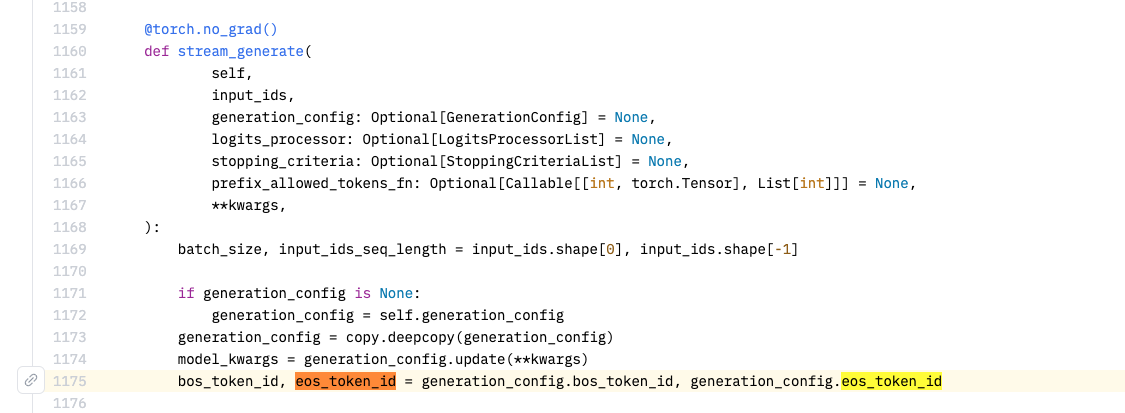

Fix eos_token_id error #67

Closed

Add this suggestion to a batch that can be applied as a single commit.

This suggestion is invalid because no changes were made to the code.

Suggestions cannot be applied while the pull request is closed.

Suggestions cannot be applied while viewing a subset of changes.

Only one suggestion per line can be applied in a batch.

Add this suggestion to a batch that can be applied as a single commit.

Applying suggestions on deleted lines is not supported.

You must change the existing code in this line in order to create a valid suggestion.

Outdated suggestions cannot be applied.

This suggestion has been applied or marked resolved.

Suggestions cannot be applied from pending reviews.

Suggestions cannot be applied on multi-line comments.

Suggestions cannot be applied while the pull request is queued to merge.

Suggestion cannot be applied right now. Please check back later.

tokenizer.eos_token_idoutputs 20002, but theeos_token_iddefined in the config.json of ChatGLM-6B is 150005, so it needs to be changed to 150005.{ "_name_or_path": "THUDM/chatglm-6b", "architectures": [ "ChatGLMModel" ], "auto_map": { "AutoConfig": "configuration_chatglm.ChatGLMConfig", "AutoModel": "modeling_chatglm.ChatGLMForConditionalGeneration", "AutoModelForSeq2SeqLM": "modeling_chatglm.ChatGLMForConditionalGeneration" }, "bos_token_id": 150004, "eos_token_id": 150005, "hidden_size": 4096, "inner_hidden_size": 16384, "layernorm_epsilon": 1e-05, "max_sequence_length": 2048, "model_type": "chatglm", "num_attention_heads": 32, "num_layers": 28, "position_encoding_2d": true, "torch_dtype": "float16", "transformers_version": "4.23.1", "use_cache": true, "vocab_size": 150528 }